Gemini and I Wrote a Book: Introduction to Computational Linguistics

The Book

Let me kick off with the links:

The book has been generated with

The prompts are tailored towards a textbook but are generic otherwise. You can set any title you want and get your own book. YMMV. I ended up needing a lot of small LaTeX-related patches to get this specific book to compile. Those patches may not extend well to a different technical book. (In hindsight, it could have been done better. In the next version.) To edit the authors or the rest of the title page, adjust “template.tex.”

The Story

If I am feeling mentally fresh in the evening, I would typically do some work on my long-term hobby projects. If I am rather more tired, I would watch some seasonal anime or go fetch a drink maybe. This evening was borderline. Not quite up for an ongoing project, but up for a new project when the initial excitement gives that big motivational boost. And so here we are, I and Gemini, writing a book on computational linguistics.

The subject is easy to choose: there is some self-referential beauty in asking an LLM to write a book on computational linguistics. I draft a few design points for myself:

prompt and code separation;

human-readable caches for LLM queries on the execution path;

a wrapper around Google’s genai with a simpler API tailored for this specific project.

I start the project in Python because there is high uncertainty what the project will entail. Python has the richest ecosystem among interactive programming languages, and whatever need would arise in the project, there certainly will be a package for that. That being said, Python does not have Lisp macros. No matter how hard I try to compact an LLM query into a Python function, there is either some boiler-plate remaining or the syntax just looks weird. So I am going with two syntaxes: YAML for defining queries and Python for the rest.

Even if LLMs were deterministic, open source, and could run locally so that replication is guaranteed, one could still consider caching for efficiency reasons. Absent all of the above, caching is a self-evident requirement. When working in Python I have a habit of using .pickle for caching but this time around I am trying out YAML—so that I can easily browse with a text editor through past LLM queries and responses. (And no, this blog has not been written by an LLM. My em-dashes are my own.)

I get sidetracked. I have thought I would use YAML over TOML, because I expect a mixture of structured and text data and TOML insists on strict quoting of text data, which feels overly verbose in this case. What to say? Firstly, there are way too many YAML packages for Python. Secondly, YAML also needs to quote text, but only occasionally. That is just worse. So, for stylistic reasons and because I am getting fairly annoyed wrangling with the YAML packages, I am writing my own YAML-inspired data serialization format.

What feels like a year later, all that is done and shiny. And I can finally draft my first query. The YAML part is concise:

and so is the corresponding Python part:

To be fair, a simple “Write me a book on computational linguistics. You have a budget of 90,000 words.” would be just as good if not better. And if not today, then quite possibly tomorrow. But of course I want to cling to the illusion of being in control for as long as I can. And so I am meticulously drafting queries for chapters, for chapter outlines, for layout elements, etc. It’s also fun, I would not deny that.

Some time now into composing a set of queries to write a book. If I am to summarize my view on today’s LLMs in just a few words, they are knowledgeable but dimwitted. That is to say, LLMs have no notion of logic. They mimic the logic we project in our writing. That works surprisingly well at times. But the system is also finicky and very different computational pathways can get triggered by minute changes. Same logic in your query, same intent, just different phrasing, and the results can be very different. (Technically, that may simply be an over-fitting issue during model training). That also makes it extremely difficult for us, humans, to optimize the queries. Especially, if you are a little bit of a perfectionist, like I am, this inability to slightly tweak the prompt to get slightly better results can be genuinely frustrating.

Once I am at peace though that the results are what they are, I can lean back and enjoy the automation. Yes, it took me a while to get the system up and running and to have the first few parts of the first chapter done. But then, I just add a loop over the chapters, and for each chapter I add a loop over its parts. And 161 minutes later the whole book is written, at a cost of about 12 euros (excluding experimentation). All that remains is some manual coding to compile the results into a LaTeX document. Final statistics:

| Statistic | Value |

|---|---|

| Number of queries | 351 |

| Total duration | 161 min |

| Input tokens | 745,684 |

| Thought tokens | 905,582 |

| Output text tokens | 222,037 |

| Output image tokens | 15,480 |

Have I read the book? Is it any good? I mean, who reads books nowadays, let’s just ask Gemini.

Me: Attached is a preprint book on Computational Linguistics. It is written by a world-famous albeit controversial author. The title and the imprint pages have been redacted to keep the review fair. Read the book, write a one paragraph review. Be concise and objective in your assessment.

Gemini: This preprint offers a comprehensive and methodically structured introduction to the field of computational linguistics. It systematically builds from foundational concepts like formal languages, corpus analysis, and N-gram models to cover the core tasks of syntactic parsing, semantics, and machine translation. The book’s strength lies in its clear, didactic approach, using well-chosen diagrams and concrete examples to explain classic algorithms and the evolution of the field through its major paradigms, from rule-based systems to statistical methods. While the final chapter on Large Language Models and ethics acknowledges the current state-of-the-art, the text’s primary focus remains on providing a deep and rigorous grounding in the foundational techniques that predate the modern deep learning era, making it a valuable resource for understanding the historical and theoretical underpinnings of the discipline.

I have half hoped it would call me on my shenanigans...

In form, the book is inferior to a real textbook in multiple regards. To highlight the elephant in the room, or rather the absence thereof: literature references. I cannot think of a robust and legal way to add related books and papers. If you ask Gemini itself, it hallucinates. It is so bad at times, I stopped trying to ask it for any links whatsoever. Of all the organizations, Google should know their links, I would have thought. And, unlike some of the big AI labs out there, I cannot simply use a RAG with Anna’s Archive to fetch the relevant literature without any risk of legal repercussions. So, no references. (To be fair, on the subject at hand a lot of papers would be available publicly, but not on many other subjects.)

In fact, I have read the book from cover to cover. A good textbook author brings his own thoughts and a deeper understanding to the pages. Gemini does none of that. An analogy that comes to mind is my school essays. I would find sources, copy-paste some paragraphs, rewrite those in my own words so it’s not plagiarism, all the while making it worse for I haven’t got a single clue what I am talking about. But it could also be a simple matter of memory. I wonder, if I write a simple framework that lets Gemini do its own research into linguistics for a while, take notes, and then use those notes as a sort of memory when writing a book, will the book have a voice? Easy to do, of course. Tis but a lack of a research budget that stops me from trying.

The biggest trouble I had in retrospect? Orthography and syntax. If you give Gemini the freedom to write in Markdown and use LaTeX for formulas and tables, it goes wild. Obviously, there are single, double and backquotes. There are formulas with quotes inside, there are footnotes using Markdown syntax, there are footnotes using Unicode superscripts. The list goes on. If a Gemini ever submits a piece to The New Yorker, that piece will be failed on its orthographic shortcomings. I have given up midway through Chapter 2 in trying to write generic rules to tidy up the output. More specific prompts, more stringent validation, maybe another LLM pass just for the orthography, and maybe doing away with Markdown altogether will improve the results, but spending time on that feels like work rather than anything, so I am leaving quite a few artefacts as is in the text.

Closing Thoughts

In conclusion, what can I say? As I have mentioned already, this is good fun (artefacts included). My hands are itching to try new approaches and new titles. “The Rise and Fall of Rome: an Illustrated Primer” anyone? On a more serious note, and I am claiming nothing new here, most markets will be affected. Take economic consultancy, something I have been doing for a good part of my carrier. On their own, LLMs are not yet good enough to substitute for my typical workday. But they are already good enough that it is possible to envision how a combination of classical AI leveraged for planning, logical arguments, and standard data analysis tasks can, in combination with LLMs, make a system that does what I do. I need to start packing, I guess.

The way the economics and technology look now, there are massive economies of scale in the LLM business. Even more market power will be concentrated around a few technological firms. Policy capture will become a bigger problem still. Also, there might not be the next generation of critical thinkers coming out of schools and universities.

That dystopian scenario is sometime in the future though. Today, the competition is still strong, the products are subsidised, and for me, personally, the most exciting consequence is that we might be seeing the return of “made in my garage” era of the early internet. Not talking about this book making endeavour, of course, just contemplating more generally. An individual can now undertake hobby projects that previously would have required a whole team of people. Sure, the quality of the LLM output is “meh” at times, but the question is, is “meh” maybe good enough to bootstrap the final product? A stone knife still makes a decent spear.

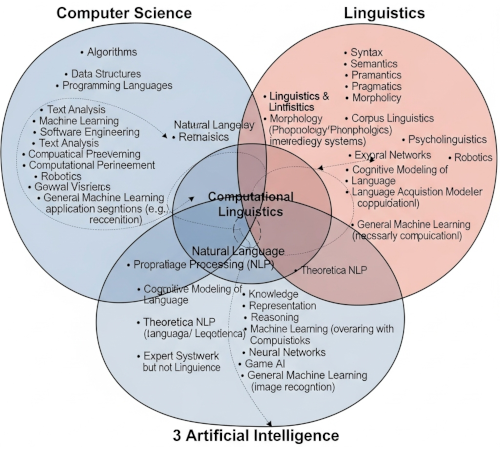

I do not know what consciousness is, maybe nobody does. But that knowledge is not necessary for constructing general problem solving algorithms. Numerous specialized algorithms exist, of course. So do a few general ones—propositional logic coupled with various searching techniques for big state spaces, Bayesian networks coupled with the Hamiltonian Monte Carlo—but these general algorithms fail at scales seemingly required for ordinary everyday tasks. The combinatorial complexity of selecting and processing information and then acting optimally, or rationally for that matter, explodes. Whether by virtue of evolution or simply due to an extremely unlikely happenstance, which would explain why we have not heard from the aliens, we humans are good at heuristics when solving problems. Heuristics help us to cut through the complexity. Still, those are only heuristics and they can fail and then your friends encourage you not to be a blithering idiot. So we use ensembles of disparate heuristics. One can argue LLMs also use heuristics for their reasoning, whether picked from human texts, or brought about with RL. They should also use ensembles thereof. A push for multimodal LLMs is partly there because those models can reach new markets, but multimodality can also prove beneficial for general reasoning. Anecdotally, I solve a lot of problems visually. As it stands now, and as is evident from the diagrams Gemini concocted, LLMs cannot yet do spatial reasoning. I would bet we get the next leap forward when that comes.

Set Operations are Difficult

So, here is a blooper for a Venn diagram illustrating Computational Linguistics as an intersection of Linguistics, Computer Science, and Artificial Intelligence. Don’t be distracted by the spelling mistakes by the way, we’ve all been there.